"I really wanted to learn SEO and SEOLAXY was literally the best source out there. It is the most thorough and useful SEO course."

Webflow SEO & Strategist

★★★★★

"All lessons are meticulously prepared for people at all knowledge levels, from beginners to experienced SEOs"

Junior Ecommerce SEO & AI Specialist

★★★★★

"When I discovered the depth of SEO and the way you explained it so clearly, I decided that I wanted to become an SEO specialist."

Junior Ecommerce SEO & AI Specialist

★★★★★

All SEOLAXY School Lessons

The complete SEOLAXY School course is free and available on YouTube. It gives beginners everything they need to become Junior Ecommerce SEO and AI Specialists, one of the most in-demand entry-level roles today. After you finish, another free advanced course awaits so you can take your next career step with confidence. Start with the first lesson and see why this skill set pays so well.

1) What Is Ecommerce SEO and How You Can Profit from It

2) Which Ecommerce SEO Ranking Factors REALLY Matter?

3) The BIGGEST Offpage SEO Ranking Factor Exposed!

4) The BIGGEST Google Onpage SEO Factor Revealed!

5) The Fastest Way to Get a Shop Indexed in Google

6) In THIS Cases Not Indexed URLs Aren't ERRORS!

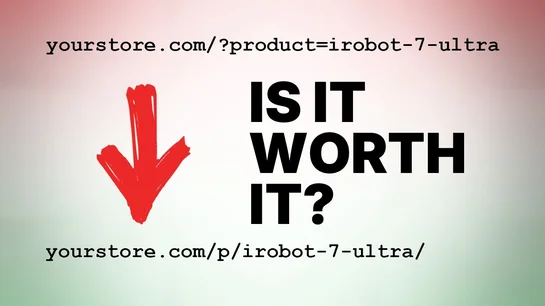

7) THIS Slash Can RUIN Years of SEO Effort!

8) Get 50% More Google Traffic with This 1-Min Hack!

9) SEO Friendly URLs Are Overrated!?

10) URL Rewriting May Ruin Your SEO Rankings!

11) Do This to Make Your Shop Finally Rank on Google!